Han Yang's Portfolio

A mini realtime-rendering engine

This project is built mainly with C++ and OpenGL. It aims to implement the basic techniques of forward and deferred shading.

This was a group project. My role was to coordinate the team and implement the rendering code (mainly writing shaders, setting up the context for them, and dispatching them to run).

GitHub repo:

(Use preprocessor definition "DEFFERRED_SHADING" to build deferred shading executable)

Video demo of forward shading only:

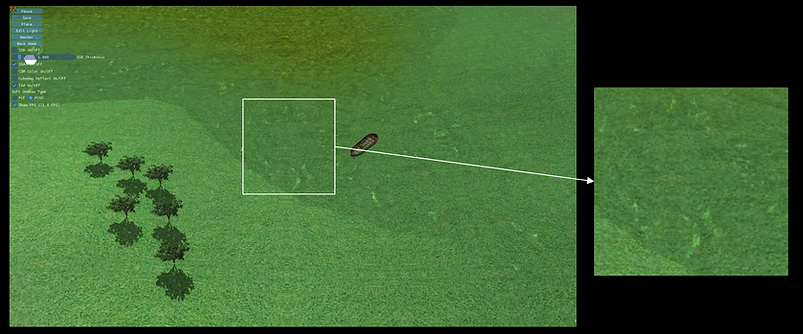

Terrain and Editing

For the terrain, I used a chunk-based design. Each chunk can be generated from either Perlin noise or a height map. A blend map controls how the different textures are mixed across the terrain.

Three different textures for blending

Blending result
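As a rough illustration of blend-map texturing, here is a minimal CPU-side sketch. It assumes the blend map stores one weight per texture in its R, G, and B channels; the `Rgb` type and `blendTerrain` function are hypothetical names for this example and are not taken from the project, where the blending would normally happen in a fragment shader.

```cpp
// RGB value with float channels in [0, 1]; used for both colors and weights.
struct Rgb { float r, g, b; };

// Blend three terrain texture samples using one blend-map texel as weights.
// Assumption: each blend-map channel weights exactly one texture.
Rgb blendTerrain(const Rgb& weights, const Rgb& texA, const Rgb& texB, const Rgb& texC) {
    float sum = weights.r + weights.g + weights.b;
    if (sum <= 0.0f) return texA;  // nothing painted: fall back to the first texture
    // Renormalize so the weights always sum to 1.
    float a = weights.r / sum;
    float b = weights.g / sum;
    float c = weights.b / sum;
    return { a * texA.r + b * texB.r + c * texC.r,
             a * texA.g + b * texB.g + c * texC.g,
             a * texA.b + b * texB.b + c * texC.b };
}
```

Renormalizing the weights keeps the result from darkening or over-brightening where the painted channels do not sum to exactly 1.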

Using imgui, we have implemented a simple GUI for scene editing and mode selection.

Every object in the scene can be selected by ray-cast picking and then edited by changing its location, rotation, and scale.
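Ray-cast picking usually comes down to intersecting a ray from the mouse cursor with some bounding volume per object. The sketch below uses the standard slab test against an axis-aligned bounding box. Whether the project uses boxes, spheres, or mesh triangles is not stated, so the `Vec3` type and `rayHitsAabb` function are assumptions made for illustration.

```cpp
#include <algorithm>
#include <limits>
#include <utility>

struct Vec3 { float x, y, z; };

// Slab test: returns true and writes the entry distance to tHit if the ray
// origin + t * dir (t >= 0) intersects the box [bmin, bmax].
bool rayHitsAabb(const Vec3& origin, const Vec3& dir,
                 const Vec3& bmin, const Vec3& bmax, float& tHit) {
    const float o[3]  = {origin.x, origin.y, origin.z};
    const float d[3]  = {dir.x, dir.y, dir.z};
    const float lo[3] = {bmin.x, bmin.y, bmin.z};
    const float hi[3] = {bmax.x, bmax.y, bmax.z};
    float tNear = 0.0f;
    float tFar  = std::numeric_limits<float>::max();
    for (int i = 0; i < 3; ++i) {
        if (d[i] == 0.0f) {
            // Ray is parallel to this slab: it must start inside it.
            if (o[i] < lo[i] || o[i] > hi[i]) return false;
            continue;
        }
        float t0 = (lo[i] - o[i]) / d[i];
        float t1 = (hi[i] - o[i]) / d[i];
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::max(tNear, t0);
        tFar  = std::min(tFar, t1);
        if (tNear > tFar) return false;  // slab intervals do not overlap
    }
    tHit = tNear;
    return true;
}
```

To pick an object, one would test the ray against every object's box and keep the hit with the smallest `tHit`.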

Grabbing object from GUI

Rotating Object

Scaling Object

The lighting in the scene can also be edited: for a directional light, the color and direction can be changed; for a point light, the color and position can be changed.

Editing the direction of directional light

When editing is finished, the scene settings can be saved to a .json file and reloaded the next time.

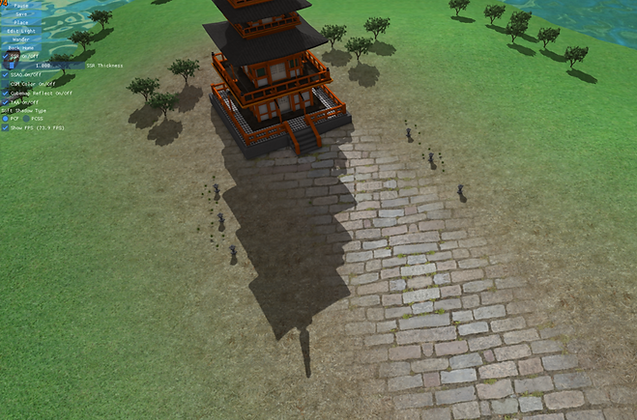

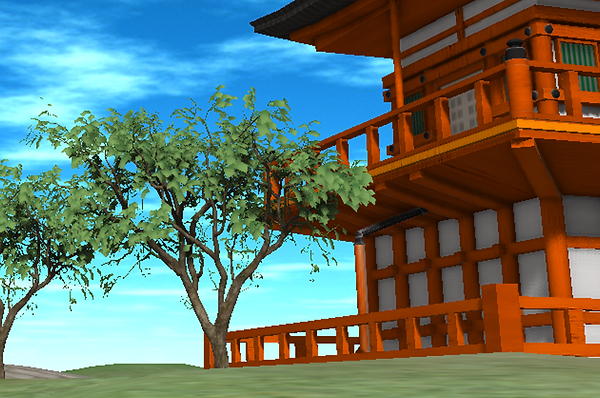

Forward rendering

Forward rendering is relatively simple. Applying the Blinn-Phong model gives a simple, though not very realistic, approximation of lighting on objects.
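For reference, the Blinn-Phong terms can be sketched on the CPU as follows. This is a minimal scalar version assuming a single white light with unit intensity. The helper names (`Vec3`, `add`, `dot`, `normalize`, `blinnPhong`) are made up for this example, and the project's actual shader code may be organized differently.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 add(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(const Vec3& v) {
    float len = std::sqrt(dot(v, v));
    if (len == 0.0f) return v;  // leave the zero vector untouched
    return {v.x / len, v.y / len, v.z / len};
}

// Returns ambient + diffuse + specular intensity for one white light.
// toLight and toView point away from the surface.
float blinnPhong(const Vec3& normal, const Vec3& toLight, const Vec3& toView,
                 float ambient, float shininess) {
    Vec3 n = normalize(normal);
    Vec3 l = normalize(toLight);
    Vec3 v = normalize(toView);
    float diffuse = std::max(dot(n, l), 0.0f);
    // Blinn-Phong uses the half vector between light and view directions
    // instead of the reflected light vector used by classic Phong.
    Vec3 h = normalize(add(l, v));
    float specular = 0.0f;
    if (diffuse > 0.0f) {
        specular = std::pow(std::max(dot(n, h), 0.0f), shininess);
    }
    return ambient + diffuse + specular;
}
```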

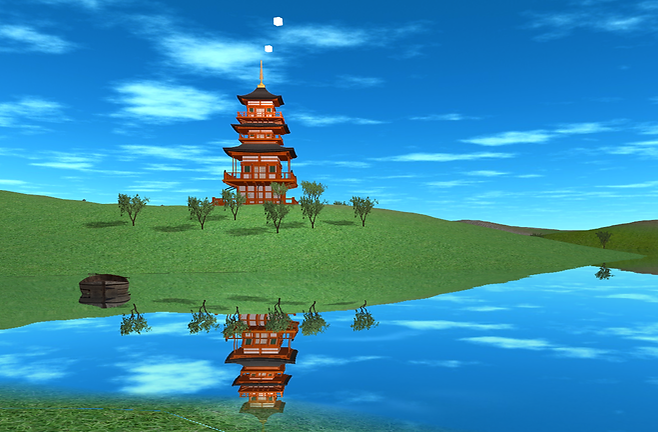

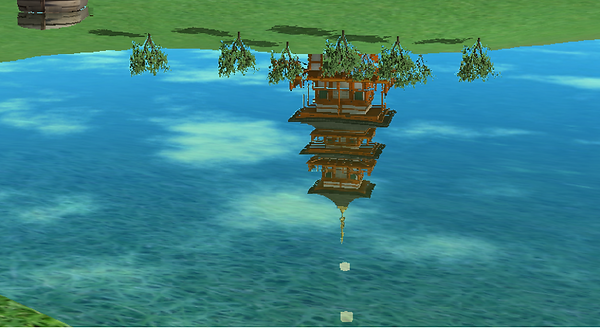

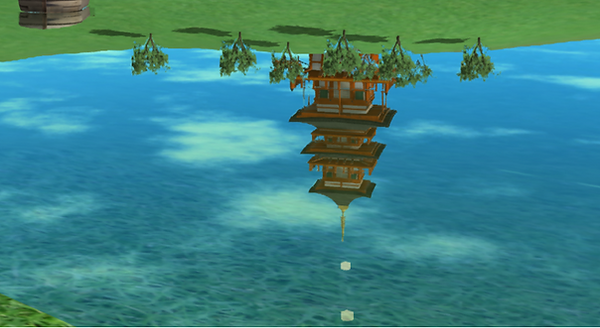

For water reflection, we render a plane with the reflection texture applied to it. The reflection texture is generated by mirroring the camera's position and view direction across the water plane and then rendering the scene from there. Once this texture is created, we apply a normal map and a distortion map to mimic specular highlights and the natural distortion of reflections.

The final result blends the reflection and refraction results according to the Fresnel effect.
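The camera mirroring step can be sketched like this, assuming a horizontal water plane at height `waterHeight`, a Y-up world, and a camera described by a position plus pitch and yaw angles. All of these are assumptions for the example; the `Camera` struct and `mirrorAcrossWater` function are hypothetical names.

```cpp
struct Vec3 { float x, y, z; };

// Hypothetical Euler-angle camera; angles are in degrees.
struct Camera {
    Vec3 position;
    float pitchDeg;
    float yawDeg;
};

// Mirror the camera across the horizontal plane y = waterHeight.
// The position is reflected through the plane and the pitch is inverted,
// so the mirrored camera looks at the scene "from below the surface".
Camera mirrorAcrossWater(const Camera& cam, float waterHeight) {
    Camera mirrored = cam;
    mirrored.position.y = 2.0f * waterHeight - cam.position.y;
    mirrored.pitchDeg = -cam.pitchDeg;
    return mirrored;
}
```

When rendering from the mirrored camera, geometry below the water is typically clipped away so that it does not show up in the reflection texture.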
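A common way to get such a blend factor in real time is Schlick's approximation of the Fresnel term, sketched below. Note that this is a swapped-in approximation and not necessarily the formula used in the project; the default `f0` of 0.02 is a typical value for water and is an assumption, as are the function names.

```cpp
#include <algorithm>
#include <cmath>

// Schlick's approximation of Fresnel reflectance.
// cosTheta: cosine of the angle between the view direction and the surface normal.
// f0: reflectance when looking straight down at the surface (assumed 0.02 for water).
float fresnelSchlick(float cosTheta, float f0 = 0.02f) {
    float c = std::max(0.0f, std::min(cosTheta, 1.0f));
    return f0 + (1.0f - f0) * std::pow(1.0f - c, 5.0f);
}

// Blend one color channel of refraction and reflection by the Fresnel factor.
float blendWater(float refraction, float reflection, float fresnel) {
    return refraction + fresnel * (reflection - refraction);
}
```

At grazing angles the factor approaches 1 and the water looks like a mirror; looking straight down, it is close to `f0` and the refracted scene dominates.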

Shadows for the directional light in the forward rendering pipeline are straightforward. We render a depth map from a virtual position of the directional light (calculated from the current camera position). Then, when rendering an object, each fragment's position is transformed into light space and used to look up the depth seen from the light. Comparing this light-view depth with the fragment's actual depth tells us whether the fragment is occluded by some object. Averaging the comparison over a small neighborhood alleviates shadow aliasing.
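The depth comparison and the neighborhood averaging can be sketched on the CPU as below. This is a simplified illustration with a 3x3 kernel and a constant depth bias; the `DepthMap` struct, `pcfVisibility` function, kernel size, and bias handling are all assumptions, and the real version would run in a fragment shader on a depth texture.

```cpp
#include <algorithm>
#include <vector>

struct DepthMap {
    int width;
    int height;
    std::vector<float> depth;  // row-major, depth as seen from the light

    // Clamp-to-edge lookup.
    float at(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return depth[y * width + x];
    }
};

// Returns the fraction of 3x3 neighbors in which the fragment is lit:
// 1.0 means fully lit, 0.0 means fully in shadow.
// (x, y) is the fragment's texel in the shadow map, fragmentDepth is its
// depth in light space, and bias reduces self-shadowing ("shadow acne").
float pcfVisibility(const DepthMap& map, int x, int y, float fragmentDepth, float bias) {
    int lit = 0;
    for (int dy = -1; dy <= 1; ++dy) {
        for (int dx = -1; dx <= 1; ++dx) {
            if (fragmentDepth - bias <= map.at(x + dx, y + dy)) ++lit;
        }
    }
    return lit / 9.0f;
}
```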

Deferred rendering

Deferred shading, however, is a little tricky: it requires an elaborate pipeline design and a total overhaul of the forward rendering code. In return, it can achieve far more than forward rendering can.

This time I intended to implement some rendering features that are really useful in modern games: cascaded shadow maps, screen-space effects, percentage-closer soft shadows, real-time water caustics, and temporal anti-aliasing.

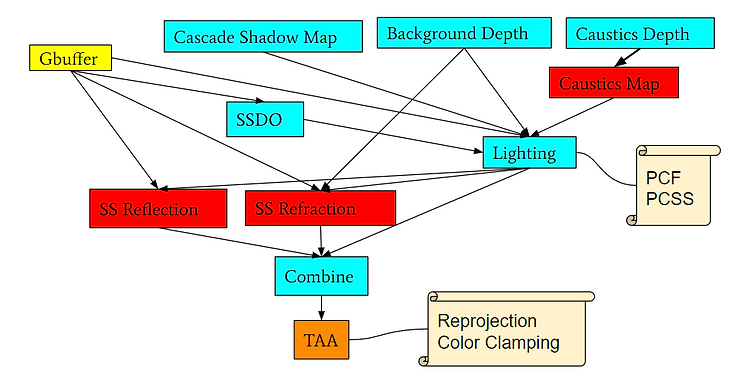

Here is an overview of my pipeline.

Deferred pipeline overview

A deferred rendering pipeline is generally considered to consist of two parts: a geometry pass and a rendering pass. The pipeline shown here is a little more convoluted because we kept adding features to it. Basically, the information preparation pass includes shadow map generation, G-buffer generation, and others. Once we have everything we need, we can start the actual rendering.

Cascade Shadow Map

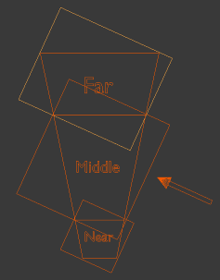

CSM is an important technique for saving GPU memory bandwidth while allowing more precise shadow calculation close to the camera.

The method is simply to compute each level of the shadow map so that it covers a certain range of the view frustum.

The level sizes can be set to approximate a logarithmic texel density distribution.
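One widely used way to choose the cascade ranges is to blend a logarithmic split with a uniform one. The sketch below shows that scheme; it is offered as an illustration and is not necessarily how the project computes its levels. The `cascadeSplits` function and its `lambda` parameter are names chosen for this example.

```cpp
#include <cmath>
#include <vector>

// Returns the far distance of each cascade along the view direction.
// lambda = 1 gives a purely logarithmic split, lambda = 0 a uniform split.
// Assumes 0 < nearZ < farZ and count >= 1.
std::vector<float> cascadeSplits(float nearZ, float farZ, int count, float lambda) {
    std::vector<float> splits(count);
    for (int i = 1; i <= count; ++i) {
        float p = static_cast<float>(i) / static_cast<float>(count);
        float logSplit = nearZ * std::pow(farZ / nearZ, p);
        float uniformSplit = nearZ + (farZ - nearZ) * p;
        splits[i - 1] = lambda * logSplit + (1.0f - lambda) * uniformSplit;
    }
    return splits;
}
```

For example, with `nearZ = 1`, `farZ = 1000`, three cascades, and `lambda = 1`, the cascades end at roughly 10, 100, and 1000 units, so the nearest cascade spends its whole shadow map on a small range close to the camera.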

Left: CSM diagram. Right: CSM visualization in our project.

Soft shadow approximation

Compared to the fixed-range filtering method (PCF), PCSS is more physically motivated and thus gives us more realistic shadows.

PCSS requires 4 steps of computation:

- calculate depth search size
- calculate average blocker distance
- calculate penumbra
- perform traditional PCF
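The second and third steps can be sketched as follows, using the usual similar-triangles penumbra estimate. This is a simplified illustration: depths are assumed to be linear distances from the light, and the function names and the `-1` "no blocker" convention are made up for the example.

```cpp
#include <vector>

// Step 2: average the depth of all samples that are closer to the light
// than the receiver. Returns -1 if no sample blocks the receiver.
float averageBlockerDepth(const std::vector<float>& sampledDepths, float receiverDepth) {
    float sum = 0.0f;
    int blockers = 0;
    for (float d : sampledDepths) {
        if (d < receiverDepth) {
            sum += d;
            ++blockers;
        }
    }
    if (blockers == 0) return -1.0f;
    return sum / static_cast<float>(blockers);
}

// Step 3: penumbra width from similar triangles.
// The further the receiver is behind the blocker, the wider the penumbra.
float penumbraWidth(float receiverDepth, float blockerDepth, float lightSize) {
    return (receiverDepth - blockerDepth) * lightSize / blockerDepth;
}
```

The resulting width is then used as the filter radius for the final PCF step, which is what makes the shadow softer as it moves away from the occluder.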

Here is a comparison between PCF and PCSS. PCSS outperforms PCF because its shadows become softer the further they extend from the occluder, which matches real-life experience.

PCF Result

PCSS Result

SSAO with indirect lighting

Screen-space ambient occlusion (SSAO) approximates the AO term from the G-buffer, which makes it possible to calculate AO in real time.

The basic method is to take samples in a hemisphere oriented along the fragment's normal and compare each sample's depth with the actual depth stored in the buffer.

Borrowing from the SSDO technique, a "blocked" sample point can be treated as a source of indirect lighting from that position, so we use the color at the blocked point to give an impression of indirect lighting.

Here is a comparison of rendering without SSAO and with it. The overall quality is clearly improved by this technique, and there is a subtle hint of indirect light bounce.
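A minimal sketch of the two ingredients, the hemisphere sample kernel and the depth comparison, is given below. The kernel is generated in tangent space with +Z as the normal direction, and depth is assumed to grow with distance from the camera. The function names, the sample scaling curve, and the bias are assumptions for illustration only.

```cpp
#include <cmath>
#include <random>
#include <vector>

struct Vec3 { float x, y, z; };

// Generate sample offsets inside the unit hemisphere around +Z (tangent space).
// Later samples may reach further out, so samples cluster near the fragment.
std::vector<Vec3> makeSsaoKernel(int count, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> unit(0.0f, 1.0f);
    std::vector<Vec3> kernel;
    kernel.reserve(count);
    for (int i = 0; i < count; ++i) {
        Vec3 s{unit(rng) * 2.0f - 1.0f, unit(rng) * 2.0f - 1.0f, unit(rng)};
        float len = std::sqrt(s.x * s.x + s.y * s.y + s.z * s.z);
        if (len < 1e-6f) { s = {0.0f, 0.0f, 1.0f}; len = 1.0f; }
        float t = static_cast<float>(i) / static_cast<float>(count);
        float radius = unit(rng) * (0.1f + 0.9f * t * t);
        kernel.push_back({s.x / len * radius, s.y / len * radius, s.z / len * radius});
    }
    return kernel;
}

// Fraction of samples that end up behind the geometry stored in the depth
// buffer. sampleDepths[i] is the depth of sample i, sceneDepths[i] the depth
// read from the buffer at the sample's screen position.
float occlusionFactor(const std::vector<float>& sampleDepths,
                      const std::vector<float>& sceneDepths, float bias) {
    if (sampleDepths.empty()) return 0.0f;
    int occluded = 0;
    for (std::size_t i = 0; i < sampleDepths.size(); ++i) {
        if (sceneDepths[i] + bias < sampleDepths[i]) ++occluded;
    }
    return static_cast<float>(occluded) / static_cast<float>(sampleDepths.size());
}
```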

AO off

AO on

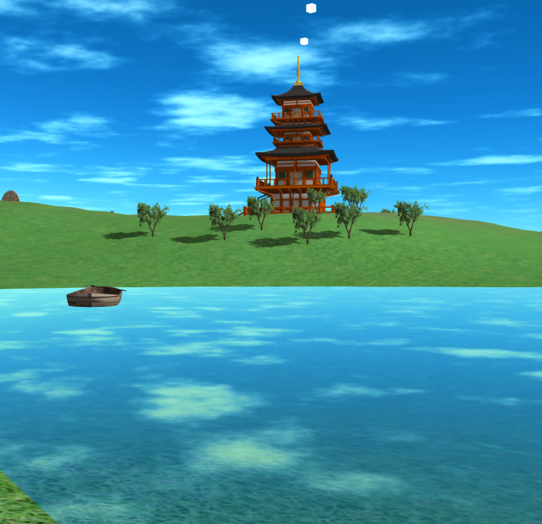

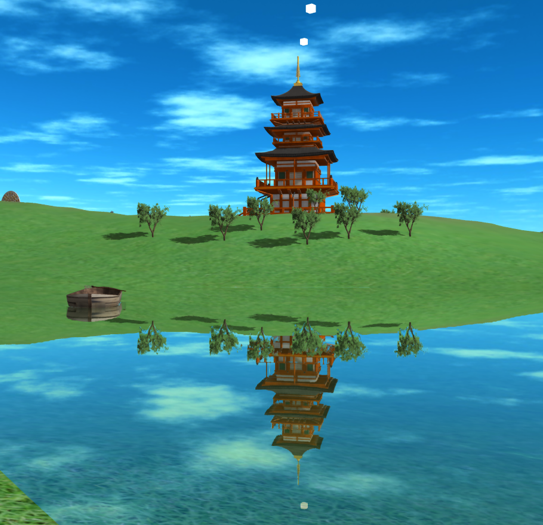

Screen Space Reflection/Refraction

SSR is an effective way to calculate real reflections on an irregular surface (mirroring the camera's position and view can only render planar reflections).

It uses the fragment information in the G-buffer (position, normals).

At each screen-space fragment, we march the reflected/refracted ray in screen space.

However, it suffers from very noisy results, and the further the ray marches, the less precise the result becomes. This can be alleviated by using Hi-Z querying or TAA.

In practice, we combine two reflection methods to get the desired outcome, because the error of ray marching when the ray should hit the sky is simply too large. The first samples the reflection from the skybox, and the second uses SSR for reflections of objects that are relatively near in the scene.
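The marching loop can be sketched on the CPU like this. It is a simplified linear march with a fixed step and a thickness test, assuming the ray is already expressed as screen UV plus linear depth. The `depthAt` callback stands in for a depth-buffer lookup, and every name here is hypothetical.

```cpp
#include <functional>

// x, y: screen UV in [0, 1]; z: linear depth (larger is further away).
struct Vec3 { float x, y, z; };

struct Hit {
    bool found;
    Vec3 position;  // last marched position; the hit point if found is true
};

// March from origin in fixed steps until the ray passes just behind the
// surface stored in the depth buffer, leaves the screen, or runs out of steps.
Hit marchRay(Vec3 origin, Vec3 step, int maxSteps, float thickness,
             const std::function<float(float, float)>& depthAt) {
    Vec3 p = origin;
    for (int i = 0; i < maxSteps; ++i) {
        p = {p.x + step.x, p.y + step.y, p.z + step.z};
        if (p.x < 0.0f || p.x > 1.0f || p.y < 0.0f || p.y > 1.0f) break;  // off screen
        float diff = p.z - depthAt(p.x, p.y);
        // A hit is a point slightly behind the visible surface. The thickness
        // limit rejects rays that merely pass behind an object.
        if (diff >= 0.0f && diff <= thickness) return {true, p};
    }
    return {false, p};
}
```

A miss (off screen or out of steps) is where a fallback such as a skybox lookup would take over.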

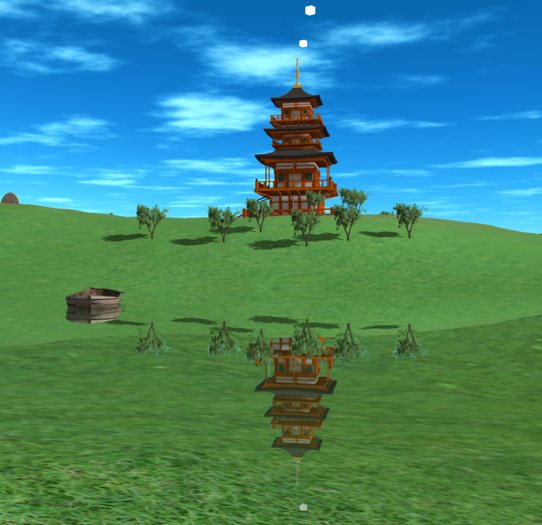

Here are our rendering results. (To show the reflection clearly, we set the water to a flat plane instead of a wavy surface.)

SSR Only

Cubemap Only

Combined Result

Realtime water caustics

Caustics are HARD to compute.

We can approximate them using methods similar to those in shadow mapping and SSR:

- get a depth map of the environment (terrain)
- march rays from every water mesh
- compute the area ratio before and after refraction
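The area-ratio step can be illustrated with a single water triangle: light that passes through a triangle of the water surface lands on a (usually differently sized) triangle on the terrain, and the brightness scales with how much the light was concentrated. The sketch below assumes the refracted hit points are already known; the helper names and the `maxIntensity` clamp are assumptions for this example.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
float length(const Vec3& v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

float triangleArea(const Vec3& a, const Vec3& b, const Vec3& c) {
    return 0.5f * length(cross(sub(b, a), sub(c, a)));
}

// Caustic intensity as the ratio of the triangle's area on the water surface
// to its area after the refracted rays hit the terrain. A ratio above 1 means
// the light was focused; below 1 means it was spread out.
float causticIntensity(const Vec3 before[3], const Vec3 after[3], float maxIntensity) {
    float areaBefore = triangleArea(before[0], before[1], before[2]);
    float areaAfter = triangleArea(after[0], after[1], after[2]);
    // Clamp to avoid a division by zero when rays converge to a point.
    float ratio = areaBefore / std::max(areaAfter, 1e-6f);
    return std::min(ratio, maxIntensity);
}
```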

Caustics Result (With Reflection and Refraction off)

Temporal AA

MSAA is HARD to use in a deferred shading pipeline, since the lighting pass has no geometry information about the scene (it only renders a full-screen quad).

We can mix pixels over time instead of space

Add jitter to each frame -> Accumulate frames over time

Temporal AA works like a charm with many screen-space effects such as SSAO and SSR.

This is because screen-space calculations tend to introduce a lot of imprecision and noise. Adding jitter to the projection matrix and blending the frames over time effectively reduces this noise. I was very impressed by TAA, because without it my rendering results looked crude and unrefined.

TAA off

TAA on

TAA off

TAA on

Project Dependencies

OpenGL: GLFW, GLAD

Model loading library: assimp

GUI library: imgui

Image Loading: stb_image

Features to be implemented

PBR material support for models

Optimize SSR with hierarchical depth buffer

And other cool stuff!